IOPS (input/output operations per second) is the standard unit of measurement for the maximum number of reads and writes to non-contiguous storage locations that a device can perform each second. It applies to devices such as hard disk drives (HDD), solid state drives (SSD), and storage area networks (SAN). IOPS is often measured with Iometer, an open source I/O measurement and characterization tool.

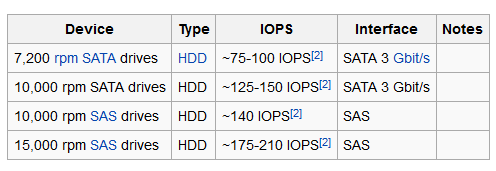

Commonly accepted average values for random I/O operations are calculated as IOPS = 1 / (average seek time + average latency), with both times expressed in seconds.
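As a minimal sketch, the formula can be written in Python. Drive datasheets usually quote seek time and latency in milliseconds, so the sketch divides 1,000 (milliseconds per second) by their sum instead of 1. The function name `disk_iops` and the sample figures are illustrative assumptions, not values taken from any particular drive.

```python
def disk_iops(avg_seek_ms: float, avg_latency_ms: float) -> float:
    """Theoretical random IOPS for a single disk: 1 / (seek + latency).

    Both inputs are in milliseconds, so 1 s = 1000 ms is used as the
    numerator to keep the result in operations per second.
    """
    service_time_ms = avg_seek_ms + avg_latency_ms
    if service_time_ms <= 0:
        raise ValueError("seek + latency must be positive")
    return 1000.0 / service_time_ms


# Hypothetical drive: 3.5 ms average seek, 2.0 ms average latency
print(round(disk_iops(3.5, 2.0)))  # 182
```

Each random I/O is assumed to pay the full seek and rotational delay, which is why this is a theoretical ceiling for random workloads rather than a measured figure.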

IOPS calculations

Every disk in your storage system has a maximum theoretical IOPS value that can be estimated with the 1 / (seek + latency) formula. Disk performance, and therefore IOPS, depends on three key factors:

- Rotational speed (aka spindle speed). Rotational speed is measured in revolutions per minute (RPM); most disks you'll consider for enterprise storage rotate at 7,200, 10,000 or 15,000 RPM, with the latter two being the most common. A higher rotational speed is associated with a higher-performing disk. This value is not used directly in the calculation, but it is highly important: the other two values depend heavily on the rotational speed, so I've included it for completeness.

- Average latency. The time (in ms) it takes for the sector of the disk being accessed to rotate into position under a read/write head. On average, this is the time the platter takes to complete half a revolution.

- Average seek time. The time (in ms) it takes for the hard drive's read/write head to position itself over the track being read or written. There are both read and write seek times; take the average of the two values.
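The factors above can be combined in a short Python sketch. Average rotational latency is taken as half a revolution: one revolution lasts 60,000 / RPM ms, so the average wait is 30,000 / RPM ms. The seek time is the mean of the read and write seek times, as described in the list. The function names and the seek figures in the example loop are hypothetical placeholders; substitute the values from your own drive's datasheet.

```python
def avg_rotational_latency_ms(rpm: int) -> float:
    """Average rotational latency in ms: half of one revolution.

    One revolution takes 60_000 / rpm ms; on average the target
    sector is half a revolution away from the read/write head.
    """
    return 30_000 / rpm


def avg_seek_ms(read_seek_ms: float, write_seek_ms: float) -> float:
    """Mean of the read and write seek times (both in ms)."""
    return (read_seek_ms + write_seek_ms) / 2


def estimate_iops(rpm: int, read_seek_ms: float, write_seek_ms: float) -> float:
    """Theoretical IOPS = 1 / (seek + latency), with ms converted to s."""
    service_time_ms = avg_seek_ms(read_seek_ms, write_seek_ms) + avg_rotational_latency_ms(rpm)
    return 1000.0 / service_time_ms


# Hypothetical drives: the seek times are placeholders, not datasheet values.
for rpm, read_seek, write_seek in [(7_200, 8.5, 9.5), (10_000, 3.8, 4.4), (15_000, 3.4, 3.9)]:
    print(f"{rpm:>6} RPM: ~{estimate_iops(rpm, read_seek, write_seek):.0f} IOPS")
```

Rotational speed never appears in the final formula on its own; it enters only through the latency term, which matches its role in the list above.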
